Are RGB Cameras Becoming Obsolete?

The RGB (red-green-blue) system of color imaging is so well established that it may seem to be the only option. There are practical and theoretical reasons, however, to consider whether RGB cameras might soon become obsolete. The RGB (or "trichromatic") system dates back to the first-ever color photograph, taken on grey-scale film through red, green and blue filters by physicist James Clerk Maxwell and photographer Thomas Sutton in 1861. Then and now, the system successfully fools humans into believing they see all colors of the spectrum when they are actually seeing different combinations of red, green and blue. A spectrometer, for example, readily distinguishes a combination of red and green light from true (single-wavelength) yellow, but humans cannot.

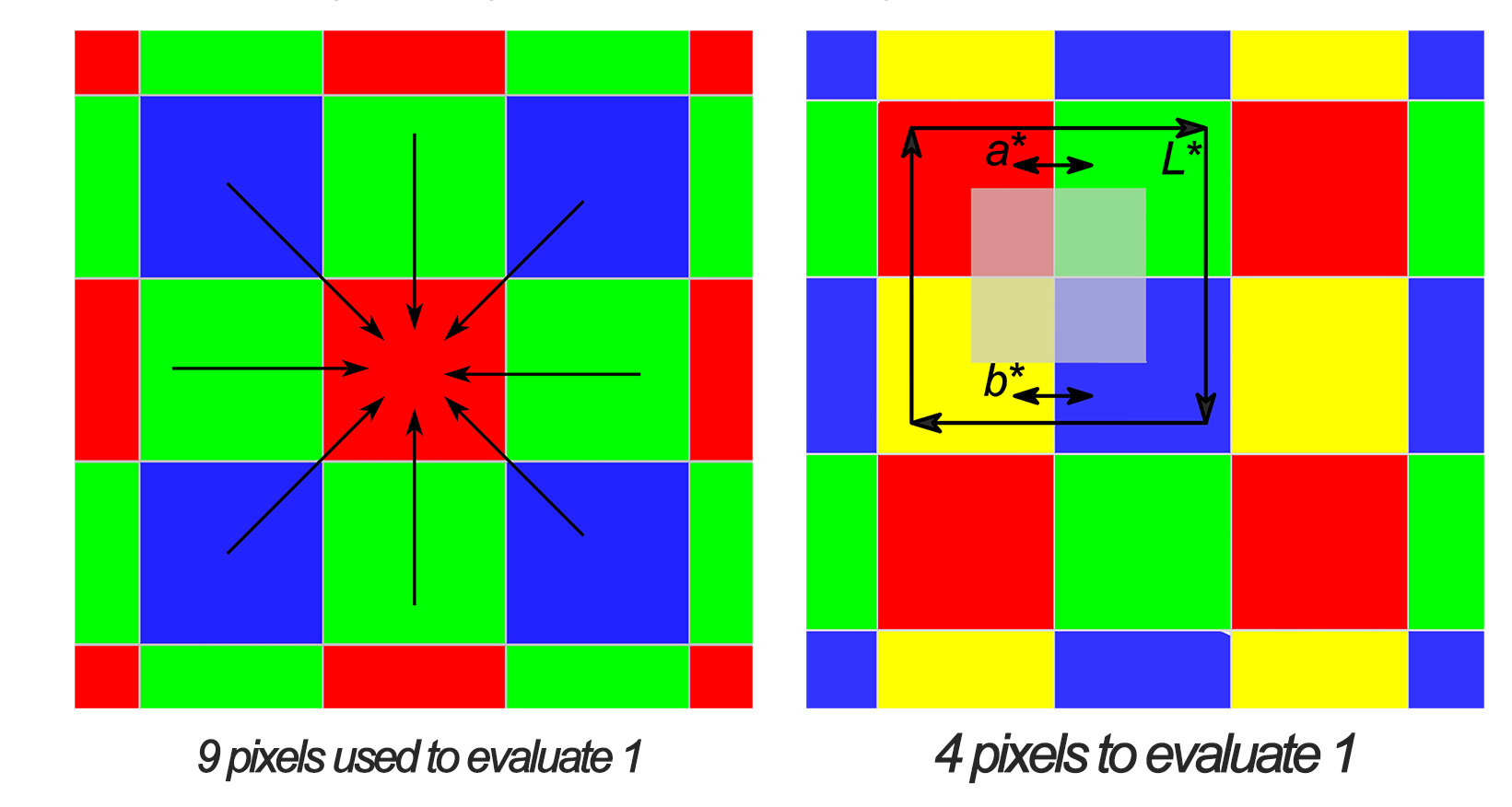

Modern digital cameras still use an array of red, green and blue filters to record light intensities onto a broad-spectrum sensor. A 4-megapixel (4 MP) camera generally does have 4,000,000 locations at which it records light intensity, but 1 million are captured through red filters, 2 million through green filters, and 1 million through blue filters. The camera's electronics CALCULATE separate red, green and blue intensities for each of the 4 million pixels, but do so by interpolating values from adjoining pixels. With a simple interpolation scheme, each pixel's color data actually incorporates data from NINE (9) pixels: the pixel itself and its eight immediate neighbors.
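The filter counts and the nine-pixel interpolation can be illustrated with a short Python sketch. It assumes the common "RGGB" filter layout and a very simple averaging scheme; real cameras use more sophisticated and usually proprietary methods, and the function names here are made up for illustration.

```python
def bayer_color(row, col):
    """Return the filter color at a sensor site, assuming an RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def count_filters(height, width):
    """Count how many sensor sites sit behind each filter color."""
    counts = {"R": 0, "G": 0, "B": 0}
    for row in range(height):
        for col in range(width):
            counts[bayer_color(row, col)] += 1
    return counts


def demosaic_pixel(raw, row, col):
    """Estimate (R, G, B) at one site from its 3x3 neighborhood.

    The color actually measured at the site is kept as-is; the two missing
    colors are averages of the neighbors that sit behind those filters.
    Sites on the edge of the sensor simply have fewer neighbors.
    """
    own = bayer_color(row, col)
    sums = {"R": 0.0, "G": 0.0, "B": 0.0}
    hits = {"R": 0, "G": 0, "B": 0}
    for r in range(max(0, row - 1), min(len(raw), row + 2)):
        for c in range(max(0, col - 1), min(len(raw[0]), col + 2)):
            color = bayer_color(r, c)
            if color == own and (r, c) != (row, col):
                continue  # do not blur the channel that was measured directly
            sums[color] += raw[r][c]
            hits[color] += 1
    return tuple(sums[k] / hits[k] for k in ("R", "G", "B"))


# Tiny 4x4 sensor whose red, green and blue sites read 100, 50 and 20.
levels = {"R": 100, "G": 50, "B": 20}
raw = [[levels[bayer_color(r, c)] for c in range(4)] for r in range(4)]
print(count_filters(4, 4))        # same 1:2:1 ratio as the 4-megapixel example
print(demosaic_pixel(raw, 1, 1))  # a blue site: red and green are interpolated
```

At the blue site in this example, only the blue value is measured. The red value comes from the four diagonal neighbors and the green value from the four adjacent ones, which is where the nine-pixel figure comes from.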

Way back in 1892, physiologist Ewald Hering offered an alternative theory of human color vision, called "Opponent Process Theory." He cited experiments that trichromatic theory could not explain, including "afterimages" which linger in our field of view. If we stare at a blue spot for 20 seconds, for example, then look at a white background, we generally see a yellowish afterimage. To explain these observations, Hering suggested that the information transmitted to our brains is NOT the full set of RGB intensities, but rather a red-green comparison, a yellow-blue comparison and a measure of overall brightness (denoted a*, b* and L*, respectively, in the modern L*a*b* notation).

Hering's theory lives on. Most modern experts believe that trichromatic theory does provide an accurate description of how our EYES function, but that Hering's theory provides a better description of how our BRAINS actually interpret color. The L*a*b* system, which builds on Hering's theory and was standardized by the CIE in 1976, is sufficiently well established that it is available in programs such as Photoshop as an alternative method for manipulating color images.
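For readers who want to see how RGB values map onto the three opponent-style channels, here is a minimal Python sketch of the standard conversion from 8-bit sRGB to CIE L*a*b*. It assumes the sRGB color space and a D65 white point; other RGB spaces or white points need different constants, and image editors may differ in details. The function name `srgb_to_lab` is just an illustrative choice.

```python
def srgb_to_lab(r8, g8, b8):
    """Convert 8-bit sRGB values to CIE L*a*b* (D65 white point assumed)."""

    def to_linear(c8):
        # Undo the sRGB transfer curve ("gamma") to get linear light.
        c = c8 / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = to_linear(r8), to_linear(g8), to_linear(b8)

    # Linear sRGB to CIE XYZ (D65).
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b

    def f(t):
        # Cube root with a linear segment near black, per the CIELAB definition.
        delta = 6.0 / 29.0
        return t ** (1.0 / 3.0) if t > delta ** 3 else t / (3 * delta ** 2) + 4.0 / 29.0

    # Normalize by the D65 reference white.
    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)

    lightness = 116.0 * fy - 16.0  # L*: overall brightness
    a_star = 500.0 * (fx - fy)     # a*: green (negative) to red (positive)
    b_star = 200.0 * (fy - fz)     # b*: blue (negative) to yellow (positive)
    return lightness, a_star, b_star


print(srgb_to_lab(255, 255, 255))  # white: L* close to 100, a* and b* close to 0
print(srgb_to_lab(0, 0, 255))      # blue: b* is strongly negative
print(srgb_to_lab(255, 255, 0))    # yellow: b* is strongly positive
```

Blue and yellow land at opposite ends of the b* axis, just as red and green land at opposite ends of a*, which mirrors the opponent pairs in Hering's theory.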

Display devices such as TVs and computer monitors do need to produce images that match the functioning of the human eye, so they may always need to use RGB displays. There is no fundamental reason, however, that cameras need to capture all the same data our eyes capture and then discard. Cameras could capture values for L*, a* and b* directly--and the result might be both better color quality and better spatial resolution.

Will RGB digital cameras really become obsolete? Maybe, maybe not. But it is a question worth considering--and that's what our website is all about.